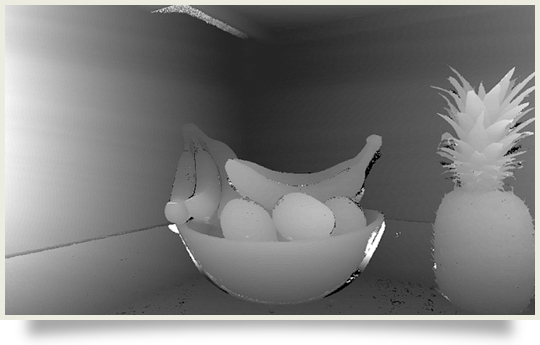

Michal Zalewski is one crazy cat, I would say: he has built his own “simple and reliable” 2.5D photography rig. If you have ever used z-buffer data from a 3D application or, for those of you who are old school, a black-fog pass in 3D, you will certainly understand the benefit of having such an image.

“As a CS buff, I am deeply impressed by the 3D scene reconstruction research; but as a long-time photographer, I can’t help but notice that great advances in photo editing could potentially be made with much simpler tools. In particular, I am intrigued by 2.5D imaging (to borrow the term from the world of CNC machining): regular 2D pictures augmented with precise, per-pixel distance information, but no data about undercuts, backfaces, and so forth. This trick would enable you to take a photo with a relatively small aperture, and then:

- Automatically split objects into layers: accurate distance and distance continuity information could be used to very accurately isolate elements of the composition, and allow them to be selectively edited or even replaced – eliminating one of the most difficult and time-consuming steps in photo processing today.

- Set focus and compute bokeh on software level: since per-pixel distance information is available, it is possible to apply (and then tweak) selective, highly realistic blur as a function of distance, to achieve the desired aesthetic effect after all the retouching and composition changes are done. Creative bokeh effects would be also easy to achieve. Side note: a comparable goal is also being pursued with specialized sensor and aperture designs, although it is not clear if or when they would be available commercially.

- Apply volumetric effects: a number of other depth-based 2.5D filters could be conceivably created, most notably including advanced, dimensional lighting and fog.

- Still use existing 2D software: since all mainstream 2D photo manipulation programs feature extensive support for layers and grayscale masks, their existing features could be easily leveraged to intuitively work with 2.5D photography, without the need to develop proprietary editing frameworks.”
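To make the layer and fog ideas a bit more concrete, here is a minimal sketch of my own, not Zalewski's code. It assumes the depth map arrives as a plain 2D list of per-pixel distances and that the photo is a grayscale 2D list of the same size. The function names, the band boundaries, and the exponential fog model are all my assumptions, chosen only for illustration.

```python
import math


def split_into_layers(depth, boundaries):
    """Return one 0/255 grayscale mask per depth band.

    depth: 2D list of per-pixel distances (units are an assumption).
    boundaries: ascending distances that separate the bands.
    """
    edges = [float("-inf")] + list(boundaries) + [float("inf")]
    masks = []
    for near, far in zip(edges, edges[1:]):
        # A pixel belongs to this layer when its distance falls in [near, far).
        masks.append([[255 if near <= d < far else 0 for d in row]
                      for row in depth])
    return masks


def apply_fog(image, depth, fog_color=200, density=0.5):
    """Blend each grayscale pixel toward fog_color as distance grows.

    Uses a simple exponential falloff (an assumed model, not from the article):
    transmittance = exp(-density * distance).
    """
    out = []
    for img_row, depth_row in zip(image, depth):
        row = []
        for pixel, d in zip(img_row, depth_row):
            transmittance = math.exp(-density * d)
            row.append(round(pixel * transmittance
                             + fog_color * (1 - transmittance)))
        out.append(row)
    return out


# Hypothetical 2x3 scene: a near subject (about 1 unit) and a far wall (about 5 units).
depth = [[1.0, 1.2, 5.0],
         [1.1, 5.2, 5.1]]
foreground_mask, background_mask = split_into_layers(depth, [3.0])
```

Each mask is an ordinary grayscale image, so it could be loaded as a layer mask in any 2D editor, which is exactly the point of the last bullet. Real depth data would be noisy, so in practice the boundaries would likely need smoothing or hand-tuning.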

I would imagine that automatically splitting objects into layers will one day be done in-camera and in real time, for both digital stills and digital film. Imagine getting footage that already has everything in the scene on its own layer. Good God, when can we have this?!