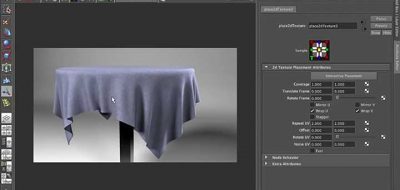

Rendering cloth is still a challenge because shaders and surface materials are typically built for a particular view from the camera. A sweater might look great at one camera distance but fall apart in a close-up. Of course, you could model the actual woven structure of the sweater, but that isn’t very efficient to render. What if you could have both? What if 3D fabric could look like real fabric and still retain fiber-scale detail?

Recent fiber-based models capture the rich visual appearance of fabrics, but are too onerous to design and edit.

The work being done at Cornell University aims to make fabric rendering not only more detailed but much faster too. Just have a look at what the teams there have done with a method called “Modular Flux Transfer” for describing and rendering 3D fabric. The results are absolutely jaw-dropping.

This year’s effort is a SIGGRAPH TOG paper that shows how to match micro-appearance models to real fabrics. Pramook Khungurn, Daniel Schroeder, Shuang Zhao, Steve Marschner, and Kavita Bala introduce a new appearance-matching framework that determines the parameters of a micro-appearance model.

The framework compares several types of micro-appearance models using photographs of the fabric taken under various lighting conditions. The system then optimizes for the parameters that best match the photographs, using a method based on calculating derivatives at render time.
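To give a feel for what "optimizing parameters with derivatives computed at render time" means, here is a minimal sketch in Python. It is not the Cornell team's renderer or method: the two-parameter shading model, the names `albedo`, `specular`, and `SHININESS`, and the synthetic "photographs" are all assumptions made up for illustration. The idea it shows is that each render returns both a value and its derivatives with respect to the material parameters, and gradient descent uses those to close the gap to the photos.

```python
# Toy stand-in for appearance matching: fit two material parameters so that
# rendered values match "photographs" taken under several lighting conditions.
# The shading model, parameter names, and data are hypothetical.

SHININESS = 8  # fixed exponent of the toy highlight lobe (assumption)


def render_with_derivatives(albedo, specular, light_cos):
    """Return the rendered value and its derivatives w.r.t. both parameters."""
    diffuse_term = light_cos
    highlight_term = light_cos ** SHININESS
    value = albedo * diffuse_term + specular * highlight_term
    # The model is linear in the parameters, so the derivatives are the terms.
    return value, diffuse_term, highlight_term


def fit_parameters(photos, light_cosines, steps=500, learning_rate=0.1):
    """Gradient descent on the summed squared error between renders and photos."""
    albedo, specular = 0.5, 0.5  # arbitrary starting guess
    for _ in range(steps):
        grad_albedo = 0.0
        grad_specular = 0.0
        for photo, light_cos in zip(photos, light_cosines):
            value, d_albedo, d_specular = render_with_derivatives(
                albedo, specular, light_cos)
            residual = value - photo
            grad_albedo += 2.0 * residual * d_albedo
            grad_specular += 2.0 * residual * d_specular
        albedo -= learning_rate * grad_albedo
        specular -= learning_rate * grad_specular
    return albedo, specular


# Synthetic "photographs": renders of known parameters under five light angles.
light_cosines = [1.0, 0.9, 0.7, 0.5, 0.3]
true_albedo, true_specular = 0.6, 0.25
photos = [render_with_derivatives(true_albedo, true_specular, c)[0]
          for c in light_cosines]

fitted_albedo, fitted_specular = fit_parameters(photos, light_cosines)
print(fitted_albedo, fitted_specular)
```

Several lighting conditions matter here for the same reason they do in the article: a single light angle cannot separate the diffuse look from the highlight, while a spread of angles pins both parameters down.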

There is also work on fitting procedural yarn models for realistic fabric and cloth rendering. It introduces an automatic fitting approach for building highly realistic procedural yarn models of fabrics with amazing fiber-level detail.

Visit Cornell University’s Modeling and Rendering Fabrics at Micron-Resolution for more amazing examples of work that aims to change the way 3D fabrics are created and rendered.