It is that time of year when new, exciting and even mind-blowing technologies make their way to ACM Siggraph. One that caught my eye was the paper *Real-time Fiber-level Cloth Rendering* by Kui Wu and Cem Yuksel (University of Utah), which describes a method for rendering cloth down to individual fibers in real time on current GPUs.

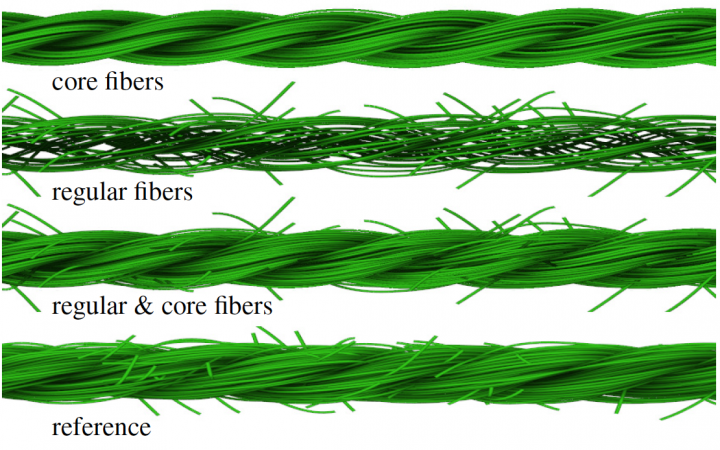

The problem it solves is that modeling cloth with realistic fiber-level geometry is expensive in both memory and computation. Wu and Yuksel's method procedurally generates fiber-level geometric detail on the fly from yarn-level control points, which minimizes the amount of data that has to be transferred to the GPU. The system uses a level-of-detail strategy, adding detail only where it is needed. This makes it possible to zoom out to see the entire garment, and zoom in to see each individual fiber that makes up the garment.
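To make the idea of deriving fibers from yarn-level control points more concrete, here is a minimal Python sketch. It is not Wu and Yuksel's implementation (theirs runs on the GPU); it assumes a piecewise-linear yarn centerline, fibers wound around it as simple helices at a single fixed radius, and a made-up level-of-detail rule that picks the fiber count from the yarn's projected width in pixels. All function names and parameters (`fiber_count_for_lod`, `generate_fibers`, `fibers_per_px`, `twist_per_unit`) are hypothetical.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

def add(a: Vec3, b: Vec3) -> Vec3: return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def sub(a: Vec3, b: Vec3) -> Vec3: return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def scale(a: Vec3, s: float) -> Vec3: return (a[0] * s, a[1] * s, a[2] * s)
def norm(a: Vec3) -> float: return math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
def normalize(a: Vec3) -> Vec3: return scale(a, 1.0 / norm(a))
def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

def fiber_count_for_lod(projected_width_px: float, fibers_per_px: float = 4.0,
                        min_fibers: int = 1, max_fibers: int = 64) -> int:
    """Hypothetical LOD rule: the more pixels a yarn covers, the more fibers it gets."""
    wanted = int(round(projected_width_px * fibers_per_px))
    return max(min_fibers, min(max_fibers, wanted))

def sample_centerline(control_points: List[Vec3], samples_per_segment: int) -> List[Vec3]:
    """Linearly interpolate between yarn control points (assumption: no curve fitting)."""
    pts = []
    for p0, p1 in zip(control_points, control_points[1:]):
        for k in range(samples_per_segment):
            pts.append(add(p0, scale(sub(p1, p0), k / samples_per_segment)))
    pts.append(control_points[-1])
    return pts

def local_frame(tangent: Vec3) -> Tuple[Vec3, Vec3]:
    """Build two unit vectors perpendicular to the tangent and to each other."""
    t = normalize(tangent)
    ref = (0.0, 0.0, 1.0) if abs(t[2]) < 0.9 else (1.0, 0.0, 0.0)
    n = normalize(cross(t, ref))
    return n, cross(t, n)

def generate_fibers(control_points: List[Vec3], yarn_radius: float, twist_per_unit: float,
                    n_fibers: int, samples_per_segment: int = 8) -> List[List[Vec3]]:
    """Wind n_fibers helices around the yarn centerline; assumes >= 2 distinct control points."""
    center = sample_centerline(control_points, samples_per_segment)
    fibers: List[List[Vec3]] = [[] for _ in range(n_fibers)]
    arc = 0.0  # arc length along the centerline drives the twist angle
    for j, c in enumerate(center):
        tangent = sub(center[j + 1], c) if j + 1 < len(center) else sub(c, center[j - 1])
        if j > 0:
            arc += norm(sub(c, center[j - 1]))
        n, b = local_frame(tangent)
        for i in range(n_fibers):
            # Each fiber gets an evenly spaced phase, then twists with arc length.
            angle = 2.0 * math.pi * i / n_fibers + twist_per_unit * arc
            offset = add(scale(n, yarn_radius * math.cos(angle)),
                         scale(b, yarn_radius * math.sin(angle)))
            fibers[i].append(add(c, offset))
    return fibers

if __name__ == "__main__":
    yarn = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.5, 0.0)]
    count = fiber_count_for_lod(projected_width_px=3.0)  # 12 fibers
    fibers = generate_fibers(yarn, yarn_radius=0.05, twist_per_unit=6.0, n_fibers=count)
    print(len(fibers), len(fibers[0]))  # prints "12 17"
```

The point of the sketch is that only the three yarn control points are stored; the fiber curves are derived on demand, so zooming in only changes the fiber count passed to `generate_fibers`, not the data that has to be kept or transferred.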

Add yarn-level ambient occlusion and self-shadows, and the results are pretty amazing. The examples show knitwear made of more than a hundred million individual fiber curves, rendered at real-time frame rates with shadows and ambient occlusion.

Watching the presentation is nothing short of breathtaking.