A New Optical Flow Wrapping Node Can Use Similarities in Textures to Fit Neutral Meshes in Wrap3

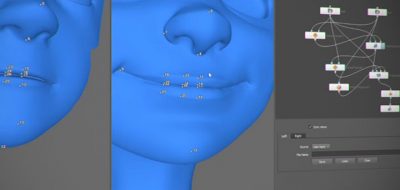

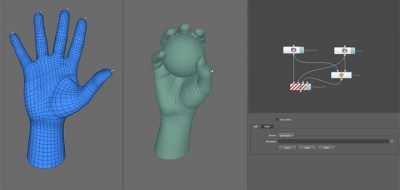

New to Wrap3 is an Optical Flow Wrapping node. This node can fit a textured base mesh to a textured scan by looking for similarities between the two textures. It can be used to fit a neutral mesh to 3D scans of facial expressions, which is a great way to get a set of blend shapes that you can use in production.

Check out the Wrap3 Optical Flow Wrapping tutorial, which shows how to first prepare a neutral mesh (one with no facial expression) that will then serve as the input to the OpticalFlowWrapping node.

Wrap3 has a standard wrapping node that works well when you are trying to conform a base mesh to a high-resolution scan. When creating blendable geometry, however, that node falls short: each expression scan has its own set of polygonal data, so finding corresponding vertex positions across a set of individual scans is much harder.

OpticalFlowWrapping can excel in this case. The node renders pairs of images of both models (the base mesh and the scan) from varying angles and finds the optical flow between each image pair. For each of the image pairs, OpticalFlowWrapping produces a set of decisions on where to relocate each pixel. All of those decisions then get merged into a global solution. Pretty cool.
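
To make the "merge into a global solution" idea more concrete, here is a minimal Python sketch. It is not Wrap3's actual implementation, which isn't described here. It assumes each camera view has already turned its optical flow result into a suggested 3D offset per vertex, and it merges those suggestions with a simple confidence-weighted average. The function name `merge_view_proposals`, the per-view `weights`, and the averaging itself are all hypothetical choices made for illustration.

```python
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def merge_view_proposals(
    proposals: List[List[Optional[Vec3]]],
    weights: List[List[float]],
) -> List[Vec3]:
    """Merge per-view offset proposals into one offset per vertex.

    proposals[v][i] is the 3D offset that view v suggests for vertex i,
    or None if the vertex is not visible in that view.
    weights[v][i] is a hypothetical confidence for that suggestion.
    """
    n_vertices = len(proposals[0])
    merged: List[Vec3] = []
    for i in range(n_vertices):
        total_w = 0.0
        acc = [0.0, 0.0, 0.0]
        for view_props, view_w in zip(proposals, weights):
            p = view_props[i]
            w = view_w[i]
            if p is None or w <= 0.0:
                continue  # this view has nothing to say about vertex i
            total_w += w
            for k in range(3):
                acc[k] += w * p[k]
        if total_w == 0.0:
            # No view saw this vertex: leave it where it is.
            merged.append((0.0, 0.0, 0.0))
        else:
            merged.append((acc[0] / total_w, acc[1] / total_w, acc[2] / total_w))
    return merged


# Two views, two vertices; the second vertex is hidden in both views.
proposals = [
    [(1.0, 0.0, 0.0), None],
    [(3.0, 0.0, 0.0), None],
]
weights = [
    [3.0, 1.0],
    [1.0, 1.0],
]
offsets = merge_view_proposals(proposals, weights)
```

In this toy setup, the first vertex ends up between the two suggestions, pulled toward the view with the higher weight, while the vertex no camera can see stays put. A real solver would likely also enforce smoothness between neighboring vertices, which this sketch leaves out.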