We’ve all used an HDR to light scenes. It’s a great way to get lighting while introducing the subtleties of tone, color, and shadow attenuation into the scene. Rendering a scene with image-based lighting (IBL) makes final renders look more convincing.

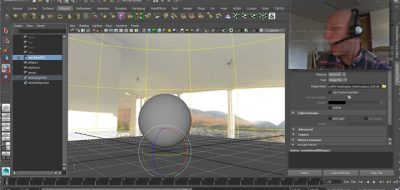

A workflow using Arnold to get image-based lighting onto texture maps so they can be layered on top of the reality-captured environment.

Image-based lighting almost always relies on a huge sky dome that encompasses the scene. The sky dome effectively treats every light as infinitely far away, so distance never enters the equation, and distance can be very important for lights when rendering.
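To make the distance problem concrete, here is a minimal sketch comparing a dome light with a local light. It assumes an idealized point light with inverse-square falloff; the function names are made up for illustration and are not part of any renderer's API.

```python
def dome_irradiance(intensity: float, distance: float) -> float:
    """Infinitely distant dome: the result does not depend on distance."""
    return intensity


def local_irradiance(intensity: float, distance: float) -> float:
    """Idealized point light: falls off with the square of the distance."""
    if distance <= 0.0:
        raise ValueError("distance must be positive")
    return intensity / (distance ** 2)


if __name__ == "__main__":
    # Move an object away from the light and compare the two models.
    for d in (1.0, 2.0, 4.0):
        print(d, dome_irradiance(10.0, d), local_irradiance(10.0, d))
```

With the dome, an object right next to a lamp and one across the room receive the same light, which is exactly what a real lamp would not do.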

There are ways around this. I’ve seen tools that can extract light sources from an HDR and fix their relative positions in the scene. I’ve also seen this done manually.
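As a rough idea of what such an extraction might involve, the sketch below finds the brightest pixel in a lat-long (equirectangular) luminance map and turns it into a direction. It assumes row 0 is the top of the image with +Y up, and the function names are hypothetical. Note that the HDR only gives a direction; the distance has to be supplied by the artist or measured from the scene.

```python
import math


def brightest_direction(luminance):
    """Return the unit direction (x, y, z) of the brightest pixel.

    `luminance` is a list of rows of floats in lat-long layout
    (assumed convention: row 0 is the top of the image, +Y is up).
    """
    height = len(luminance)
    width = len(luminance[0])
    row, col = max(
        ((r, c) for r in range(height) for c in range(width)),
        key=lambda rc: luminance[rc[0]][rc[1]],
    )
    theta = math.pi * (row + 0.5) / height       # polar angle from +Y
    phi = 2.0 * math.pi * (col + 0.5) / width    # azimuth around Y
    return (
        math.sin(theta) * math.cos(phi),
        math.cos(theta),
        math.sin(theta) * math.sin(phi),
    )


def place_light(direction, distance):
    """Turn a direction into a position; `distance` is chosen by hand."""
    return tuple(distance * c for c in direction)
```

A real tool would also cluster bright regions, estimate their size and color, and remove them from the dome so the light is not counted twice.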

Daryl Obert’s latest post in his “Journey to VR” series offers a couple of ways to add distance to an HDR and IBL lighting setup.

Daryl ends up projecting the HDR onto the geometry of the scene and using that to light the scene itself. This looks like it could be a much faster way to get the best of both worlds: using image-based lighting and having the lighting respect relative distances for a more accurate lighting solution. Visit the Journey to VR for more great resources.
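The projection idea can be sketched as a lookup from a point on the geometry back into the lat-long HDR. This is not Daryl’s actual Arnold setup, only an illustration under assumed conventions (+Y up, HDR captured at `origin`); the function name is hypothetical.

```python
import math


def project_to_latlong(origin, point):
    """Map a surface point to lat-long (u, v) as seen from the capture origin.

    Returns (u, v, distance). u and v are in the 0..1 range, and the
    distance is what a plain sky dome would throw away.
    """
    dx, dy, dz = (p - o for p, o in zip(point, origin))
    distance = math.sqrt(dx * dx + dy * dy + dz * dz)
    if distance == 0.0:
        raise ValueError("point coincides with the capture origin")
    dx, dy, dz = dx / distance, dy / distance, dz / distance
    theta = math.acos(max(-1.0, min(1.0, dy)))   # polar angle from +Y
    phi = math.atan2(dz, dx) % (2.0 * math.pi)   # azimuth around Y
    return phi / (2.0 * math.pi), theta / math.pi, distance
```

Once every surface point carries the HDR color it sees, the geometry itself can act as the emitter, so a wall two meters away lights the subject differently from one twenty meters away.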